ES6 Interview Questions and Answers 2024

Are you an ECMAScript programmer who works on client-side scripting for the web? If so, further training in ECMAScript 6 will help you handle ES6 interview questions and open the door to more opportunities. As an ES6 developer, you can use the newer syntax to write more complex applications built from classes and modules.

With features such as arrow functions, typed arrays, and math improvements, you can train yourself to build larger applications as well as libraries. IndiaHires provides the information you need to prepare for ES6 interview questions and answers in 2024.

ES6 interview questions list

- What is ES6? Define ES6 and mention its new features.

- Could you explain the difference between ES5 and ES6?

- Explain internationalization and localization.

- What are IIFEs (Immediately Invoked Function Expressions)?

- When should I use Arrow functions in ES6?

- What are the differences between ES6 class and ES5 function constructors?

- Why do we need to use ES6 classes?

- What is Hoisting in JavaScript?

- What is the motivation for bringing Symbols to ES6?

- What are the advantages of using spread syntax in ES6, and how is it different from rest syntax?

- Explain the Prototype Design Pattern?

- What is the difference between .call and .apply?

- What are the actual uses of ES6 WeakMap?

- What is the difference between ES6 Map and WeakMap?

- How to “deep-freeze” object in JavaScript?

- What is the preferred syntax for defining enums in JavaScript?

- Explain the difference between Object.freeze() vs const?

- When are you not supposed to use the arrow functions in ES6? Name three or more cases.

- Can you give an example of a curry function, and why does this syntax have an advantage?

- What are the template literals in ES6?

- What do you understand by Generator function?

The new features of ES6 are listed below:

- Constants (Immutable variables)

- Scoping

- Arrow functions

- Extended parameter handling

- Template literals

- Extended literals

- Modules

- Classes

- Enhanced Regular expressions

- Enhanced object properties.

- Destructuring Assignment

- Symbol Type

- Iterators

- Generator

- Map/Set & WeakMap/WeakSet

- Typed Arrays

- Built-in Methods

- Promises

- Meta programming

- Internationalization and Localization.

ECMAScript 5 (ES5):

The 5th edition of ECMAScript, standardized in 2009. This standard has been implemented fairly completely in all modern browsers.

ECMAScript 6 (ES6)/ ECMAScript 2015 (ES2015):

The 6th edition of ECMAScript, standardized in 2015. Current versions of the major browsers implement nearly all of this standard.

Here are some key differences between ES5 and ES6:

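- Arrow functions & string interpolation:

    // Block body with an explicit return
    const greetings = (name) => { return `hello ${name}`; };

    // Concise body with an implicit return
    // (an alternative to the declaration above; use one or the other)
    const greetings = name => `hello ${name}`;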

- Const

const works like a constant in other languages in many ways, but there are some caveats. const stands for a 'constant reference' to a value. So with const, you can actually mutate the properties of an object being referenced by the variable. You just can't change the reference itself.

    const NAMES = [];
    NAMES.push("Jim");
    console.log(NAMES.length === 1); // true
    NAMES = ["Steve", "John"]; // error

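- Block-scoped variables

The new ES6 keyword let allows developers to scope variables at the block level. let doesn't hoist in the same way var does: a let binding exists from the start of its block, but it cannot be read before its declaration has been evaluated.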
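The difference in hoisting behaviour can be sketched as follows. The function name `demoHoisting` and the variable names are made up for this illustration; the point is that reading a `var` early yields `undefined`, while reading a `let` early throws.

```javascript
function demoHoisting() {
  const seen = [];

  // A var declaration is hoisted and initialized to undefined.
  seen.push(counter); // undefined, no error
  var counter = 1;

  // A let declaration is hoisted too, but reading it before the
  // declaration line throws a ReferenceError (temporal dead zone).
  try {
    seen.push(total);
  } catch (err) {
    seen.push(err.name);
  }
  let total = 2;

  // Block scope: the inner let is a separate binding.
  {
    let total = 99;
    seen.push(total);
  }
  seen.push(total);

  return seen;
}

console.log(demoHoisting()); // [undefined, 'ReferenceError', 99, 2]
```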

- Default parameter values

Default parameters allow us to initialize functions with default values. A default is used when an argument is either omitted or undefined, which means that null counts as a valid value and does not trigger the default.

    // Basic syntax
    function multiply(a, b = 2) {
      return a * b;
    }
    multiply(5); // 10

- Class definition and inheritance

ES6 introduces language support for classes (class keyword), constructors (constructor keyword), and the extends keyword for inheritance.

- for...of statement

The for...of statement creates a loop that iterates over iterable objects such as arrays, strings, Maps, and Sets.
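A minimal sketch of the loop over a few built-in iterables; the helper name `collect` is an assumption introduced only for this example.

```javascript
// Collect whatever for...of visits on an iterable.
function collect(iterable) {
  const items = [];
  for (const item of iterable) {
    items.push(item);
  }
  return items;
}

console.log(collect(['a', 'b']));            // ['a', 'b']
console.log(collect('hi'));                  // ['h', 'i']
console.log(collect(new Set([1, 1, 2])));    // [1, 2]
console.log(collect(new Map([['k', 'v']]))); // [['k', 'v']]
```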

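- Spread operator

For merging objects (note that spread for object literals was standardized later, in ES2018; ES6 itself defines spread for arrays and function calls):

    const obj1 = { a: 1, b: 2 };
    const obj2 = { a: 2, c: 3, d: 4 };
    const obj3 = { ...obj1, ...obj2 }; // { a: 2, b: 2, c: 3, d: 4 }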

- Promises

Promises provide a mechanism to handle the results and errors from asynchronous operations. You can accomplish the same thing with callbacks, but promises provide improved readability via method chaining and succinct error handling.

    const isGreater = (a, b) => {
      return new Promise((resolve, reject) => {
        if (a > b) {
          resolve(true);
        } else {
          reject(false);
        }
      });
    };

    isGreater(1, 2)
      .then(result => { console.log('greater'); })
      .catch(result => { console.log('smaller'); });

- Modules exporting & importing

    const myModule = { x: 1, y: () => { console.log('This is ES5'); } };
    export default myModule;

    // In another file
    import myModule from './myModule';

Internationalization and localization are handled by standard JavaScript APIs that help with tasks such as collation, number formatting, currency formatting, and date and time formatting.

- Collation

It is used to search within a set of strings and to sort a set of strings. It is parameterized by locale and is Unicode-aware.

- Number formatting

Numbers can be formatted with localized separators and digit groupings. Other options include style, numbering system, percent, and precision.

- Currency formatting

Numbers can be formatted with currency symbols, along with localized separators and digit groupings.

- Date and time formatting

Dates and times can be formatted and ordered with localized separators. The format can be short or long, and it accepts other parameters such as the locale and time zone.
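The four tasks above map onto the standard `Intl` object (specified in ECMA-402, alongside the language itself). The sketch below assumes the 'en' and 'en-US' locales purely for illustration; the exact strings depend on the locale data shipped with the runtime.

```javascript
// Collation: locale-aware sorting.
const collator = new Intl.Collator('en');
console.log(['z', 'ä', 'a'].sort(collator.compare)); // ['a', 'ä', 'z']

// Number formatting with localized separators and digit grouping.
const plainNumber = new Intl.NumberFormat('en-US');
console.log(plainNumber.format(1234567.891)); // "1,234,567.891"

// Currency formatting.
const usd = new Intl.NumberFormat('en-US', { style: 'currency', currency: 'USD' });
console.log(usd.format(1234.5)); // "$1,234.50"

// Date formatting with an explicit time zone, so the result does not
// depend on where the code runs.
const dateFormat = new Intl.DateTimeFormat('en-US', {
  year: 'numeric',
  month: 'long',
  day: 'numeric',
  timeZone: 'UTC',
});
console.log(dateFormat.format(new Date(Date.UTC(2024, 0, 15)))); // "January 15, 2024"
```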

It's an Immediately Invoked Function Expression, or an IIFE for short. It runs immediately after it has been created:

    (function IIFE() {
      console.log("Hello!");
    })();
    // "Hello!"

This pattern is often used to avoid polluting the global namespace, because all variables declared inside the IIFE (as in any other function) are not visible outside its scope.

I now use the following rule of thumb for functions in ES6 and beyond:

- Use function in the global scope and for Object.prototype properties.

- Use class for object constructors.

- Use => everywhere else.

Why use arrow functions almost everywhere?

- Scope safety

When arrow functions are used consistently, everything is guaranteed to use the same this value as the root scope. If even a single standard function callback is mixed in with a bunch of arrow functions, there's a chance that the scope will get messed up.

- Compactness

Arrow functions are easier to read and write. (This may seem to be a matter of opinion.)

- Clarity

When almost everything is an arrow function, any regular function immediately sticks out as the one that defines the scope. A developer can always look up to the next-higher function statement to see what this refers to.
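The scope-safety point can be sketched with a small, hypothetical `Labeler` class (the class and method names are invented for this illustration). The arrow callback sees the instance as `this`; the regular function callback does not.

```javascript
class Labeler {
  constructor(labels) {
    this.prefix = 'tick';
    this.labels = labels;
  }

  // Arrow callback: `this` is inherited from withArrow(), i.e. the instance.
  withArrow() {
    return this.labels.map(label => `${this.prefix}:${label}`);
  }

  // Regular function callback: class bodies are strict mode and map() is
  // given no thisArg, so `this` is undefined inside the callback.
  withFunction() {
    try {
      return this.labels.map(function (label) {
        return `${this.prefix}:${label}`;
      });
    } catch (err) {
      return err.name;
    }
  }
}

const labeler = new Labeler(['a', 'b']);
console.log(labeler.withArrow());    // ['tick:a', 'tick:b']
console.log(labeler.withFunction()); // 'TypeError'
```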

Let's first look at an example of each:

    // ES5 Function Constructor
    function Person(name) {
      this.name = name;
    }

    // ES6 Class
    class Person {
      constructor(name) {
        this.name = name;
      }
    }

For simple constructors, they look pretty similar.

The main difference in the constructor comes when using inheritance. If we want to create a Student class that subclasses Person and adds a studentId field, this is what we have to do in addition to the above.

    // ES5 Function Constructor
    function Student(name, studentId) {
      // Call constructor of superclass to initialize superclass-derived members.
      Person.call(this, name);

      // Initialize subclass's own members.
      this.studentId = studentId;
    }

    Student.prototype = Object.create(Person.prototype);
    Student.prototype.constructor = Student;

    // ES6 Class
    class Student extends Person {
      constructor(name, studentId) {
        super(name);
        this.studentId = studentId;
      }
    }

Some of the reasons you might choose to use classes:

- Syntax is simpler and less error prone.

- It’s much easier (and again, less error-prone) to set up inheritance hierarchies using the new syntax than the old one.

- class protects you from the common error of failing to use new with the constructor function (the constructor throws an exception when it is called without new).

- Calling the parent prototype's version of a method is much simpler with the new syntax than with the old one (super.method() instead of ParentConstructor.prototype.method.call(this) or Object.getPrototypeOf(Object.getPrototypeOf(this)).method.call(this)).

Consider:

    // ES5
    var Person = function(first, last) {
      if (!(this instanceof Person)) {
        throw new Error("Person is a constructor function, use new with it");
      }
      this.first = first;
      this.last = last;
    };

    Person.prototype.personMethod = function() {
      return "Result from personMethod: this.first = " + this.first + ", this.last = " + this.last;
    };

    var Employee = function(first, last, position) {
      if (!(this instanceof Employee)) {
        throw new Error("Employee is a constructor function, use new with it");
      }
      Person.call(this, first, last);
      this.position = position;
    };
    Employee.prototype = Object.create(Person.prototype);
    Employee.prototype.constructor = Employee;

    Employee.prototype.personMethod = function() {
      var result = Person.prototype.personMethod.call(this);
      return result + ", this.position = " + this.position;
    };

    Employee.prototype.employeeMethod = function() {
      // ...
    };

In ES6 classes:

    // ES2015+
    class Person {
      constructor(first, last) {
        this.first = first;
        this.last = last;
      }

      personMethod() {
        // ...
      }
    }

    class Employee extends Person {
      constructor(first, last, position) {
        super(first, last);
        this.position = position;
      }

      employeeMethod() {
        // ...
      }
    }

Hoisting is the JavaScript interpreter's action of moving all variable and function declarations to the top of the current scope. There are two types of hoisting:

- variable hoisting – rare

- function hoisting – more common

Wherever a var (or function declaration) appears inside a scope, that declaration is taken to belong to the entire scope and is accessible everywhere throughout it.

    var a = 2;

    foo(); // works because the `foo()` declaration is "hoisted"

    function foo() {
      a = 3;
      console.log(a); // 3
      var a; // declaration is "hoisted" to the top of `foo()`
    }

    console.log(a); // 2

Symbols are a new primitive type whose values can be used as unique property names in objects. Using a Symbol instead of a string allows different modules to create properties that do not conflict with each other. Symbols can also be kept effectively private, so that code without direct access to the Symbol will not reach its properties through ordinary string keys (although Object.getOwnPropertySymbols() can still reveal them).

Just like the number, string, and boolean primitives, symbols have a function that can be used to create them. Unlike the other primitives, symbols do not have a literal syntax (in the way strings have ''). The only way to create one is to call the Symbol function:

    let symbol = Symbol();

In reality, Symbols are just a slightly different way of attaching properties to an object. You could easily provide the well-known Symbols as standard methods, just like Object.prototype.hasOwnProperty, which appears in everything that inherits from Object.

ES6 spread syntax is very useful when coding in a functional paradigm, as you can easily create copies of arrays or objects without resorting to Object.create, slice, or a library function. This language feature is often used in Redux and RxJS projects.

    function putDookieInAnyArray(arr) {
      return [...arr, 'dookie'];
    }

    const result = putDookieInAnyArray(['I', 'really', "don't", 'like']);
    // ["I", "really", "don't", "like", "dookie"]

    const person = {
      name: 'Todd',
      age: 29,
    };

    const copyOfTodd = { ...person };

ES6 rest syntax offers a shorthand for collecting an arbitrary number of arguments passed to a function. It is like the inverse of spread syntax: it takes data and packs it into an array rather than unpacking an array of data, and it works in function parameters as well as in array and object destructuring assignments.

    function addFiveToABunchOfNumbers(...numbers) {
      return numbers.map(x => x + 5);
    }

    const result = addFiveToABunchOfNumbers(4, 5, 6, 7, 8, 9, 10);
    // [9, 10, 11, 12, 13, 14, 15]

    const [a, b, ...rest] = [1, 2, 3, 4];
    // a: 1, b: 2, rest: [3, 4]

    const { e, f, ...others } = {
      e: 1,
      f: 2,
      g: 3,
      h: 4,
    };
    // e: 1, f: 2, others: { g: 3, h: 4 }

The Prototype Pattern creates new objects, but rather than creating uninitialized objects, it returns objects that are initialized with values copied from a prototype (or sample) object. The Prototype pattern is also referred to as the Properties pattern.

An example of where the Prototype pattern is useful is the initialization of business objects with values that match the default values of the database. The prototype object holds the default values that are copied to the newly created business object.

Classical languages rarely use the Prototype pattern, but JavaScript, being a prototypal language, uses this pattern in the construction of new objects and their prototypes.
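A minimal sketch of the business-object scenario, assuming a hypothetical `customerDefaults` prototype and `createCustomer` factory (neither comes from a real library). Note that `Object.create` makes the new object delegate to the prototype rather than copying its values, which is the usual JavaScript variation on the pattern.

```javascript
// Hypothetical prototype object holding the default values.
const customerDefaults = {
  country: 'Unknown',
  newsletter: false,
  describe() {
    return `${this.name} (${this.country})`;
  },
};

// Create an object that delegates to the prototype, then set its own fields.
function createCustomer(name, overrides = {}) {
  const customer = Object.create(customerDefaults);
  return Object.assign(customer, { name }, overrides);
}

const alice = createCustomer('Alice');
const bob = createCustomer('Bob', { country: 'India' });

console.log(alice.describe()); // "Alice (Unknown)"
console.log(bob.describe());   // "Bob (India)"
console.log(Object.getPrototypeOf(alice) === customerDefaults); // true
```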

Both .call and .apply are used to invoke functions, and the first parameter is used as the value of this within the function. However, .call takes comma-separated arguments as the remaining arguments, while .apply takes a single array of arguments. An easy way to remember this is C for call and comma-separated, and A for apply and array of arguments.

    function add(a, b) {
      return a + b;
    }

    console.log(add.call(null, 1, 2)); // 3
    console.log(add.apply(null, [1, 2])); // 3

WeakMaps provide a way to extend objects from the outside without interfering with garbage collection. Whenever you want to extend an object but cannot, because it is sealed or comes from an external source, a WeakMap can be applied.

    var map = new WeakMap();
    var pavloHero = { first: "Pavlo", last: "Hero" };
    var gabrielFranco = { first: "Gabriel", last: "Franco" };

    map.set(pavloHero, "This is Hero");
    map.set(gabrielFranco, "This is Franco");

    console.log(map.get(pavloHero)); // This is Hero

The interesting aspect of a WeakMap is that it holds only a weak reference to each key inside the map. A weak reference means that once the key object is no longer referenced anywhere else, the garbage collector can remove the entire entry from the WeakMap, freeing up memory.
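As a sketch of the "extend a sealed or external object" use case, the snippet below attaches a visit counter to a frozen object without touching it. The names `visitCounts` and `recordVisit` are hypothetical.

```javascript
// Side table keyed by object identity; entries do not keep the keys alive.
const visitCounts = new WeakMap();

function recordVisit(target) {
  const count = (visitCounts.get(target) || 0) + 1;
  visitCounts.set(target, count);
  return count;
}

// A frozen object cannot receive new properties of its own.
const externalObject = Object.freeze({ id: 'sidebar' });

recordVisit(externalObject);
console.log(recordVisit(externalObject));     // 2
console.log(visitCounts.has(externalObject)); // true
console.log('count' in externalObject);       // false, the object is untouched
```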

The two behave differently when the object referenced by a key is no longer reachable. Take the following example code:

    var map = new Map();
    var weakmap = new WeakMap();

    (function() {
      var a = { x: 12 };
      var b = { y: 12 };

      map.set(a, 1);
      weakmap.set(b, 2);
    })();

After the above IIFE has executed, there is no way to refer to {x: 12} and {y: 12} anymore. The garbage collector can go ahead and remove the entry for key b from the WeakMap and also remove {y: 12} from memory. In the case of the Map, however, the garbage collector does not remove the entry from the Map and does not remove {x: 12} from memory.

WeakMap allows the garbage collector to do its job, but Map does not. With manually written maps, the array of keys would keep references to the key objects, preventing them from being garbage collected. In native WeakMaps, references to key objects are held weakly, which means that they do not prevent garbage collection when there is no other reference to the object.

If you want to make sure that an object is deeply frozen, you need to create a recursive function that freezes each property that is itself an object:

Without deep freeze:

    let person = {
      name: "Leonardo",
      profession: {
        name: "developer"
      }
    };
    Object.freeze(person); // make object immutable (shallow)
    person.profession.name = "doctor";
    console.log(person); // { name: 'Leonardo', profession: { name: 'doctor' } }

With deep freeze:

    function deepFreeze(object) {
      let propNames = Object.getOwnPropertyNames(object);
      for (let name of propNames) {
        let value = object[name];
        // Recurse into nested objects before freezing the parent
        object[name] = value && typeof value === "object" ? deepFreeze(value) : value;
      }
      return Object.freeze(object);
    }

    let person = {
      name: "Leonardo",
      profession: {
        name: "developer"
      }
    };
    deepFreeze(person);
    // In strict mode: TypeError: Cannot assign to read only property 'name' of object
    // (in non-strict code the assignment is silently ignored)
    person.profession.name = "doctor";

Since JavaScript 1.8.5 (ECMAScript 5), it has been possible to seal and freeze objects, so an enum can be defined as:

    const DaysEnum = Object.freeze({"monday":1, "tuesday":2, "wednesday":3, ...})

Or

    const DaysEnum = {"monday":1, "tuesday":2, "wednesday":3, ...}
    Object.freeze(DaysEnum);

and voila! JS enums.

However, this does not prevent you from assigning an undesired value to a variable, which is often the main goal of enums:

    let day = DaysEnum.tuesday
    day = 298832342 // goes through without any errors

ES6 introduced several new features to JavaScript, among them the const keyword. People sometimes confuse const with the Object.freeze() method (which dates from ES5), but the two are completely different.

Const:

The const keyword creates a read-only reference to a value. A variable declared with const cannot be reassigned to a different value; trying to do so results in a TypeError.

    const myName = "indiahires";
    console.log(myName);

    // Uncaught TypeError
    myName = "ih";

The const keyword ensures that the created variable is read-only, but it does not mean that the value it refers to is immutable. Even though the variable person below is constant, you can still change the value of its properties. What you cannot do is reassign a different value to person.

    const person = {
      name: "indiahires"
    };

    // No TypeError
    person.name = "ih";
    console.log(person.name);

Object.freeze() method:

If you want the value of the person object to be immutable, you have to freeze it using the Object.freeze() method.

    const person = Object.freeze({ name: "indiahires" });

    // TypeError in strict mode; silently ignored otherwise
    person.name = "ih";

The Object.freeze() method is shallow, which means that it freezes the properties of the object itself, not the objects referenced by those properties.

    const person = Object.freeze({
      name: 'indiahires',
      address: {
        city: "Chandigarh"
      }
    });

    // The person.address object is not immutable, so you can add
    // a new property to it without a TypeError:
    person.address.country = "India";

Final words

const prevents reassignment.

Object.freeze() prevents mutation.

Arrow functions should NOT be used:

- When we want function hoisting – as arrow functions are anonymous.

- When we want to use this/arguments in a function – as arrow functions do not have this/arguments of their own, they depend upon their outer context.

- When we want to use named function – as arrow functions are anonymous.

- When we want to use function as a constructor – as arrow functions do not have their own this.

- When we want to add function as a property in object literal and use object in it – as we can not access this (which should be object itself).
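Three of the cases above can be checked directly. This is a small sketch with invented names (`ArrowPerson`, `countArgs`, `makeCounter`); the 'use strict' directive inside `makeCounter` is there so that its own `this` is undefined regardless of how the file is loaded.

```javascript
// 1. Not constructible: `new` on an arrow function throws.
const ArrowPerson = name => ({ name });
let constructorError = null;
try {
  new ArrowPerson('Ada');
} catch (err) {
  constructorError = err.name;
}
console.log(constructorError); // 'TypeError'

// 2. No own `arguments`: use a regular function or rest parameters.
function countArgs() {
  return arguments.length;
}
const countArgsArrow = (...args) => args.length;
console.log(countArgs(1, 2, 3), countArgsArrow(1, 2, 3)); // 3 3

// 3. As an object-literal method, an arrow does not get the object as `this`.
function makeCounter() {
  'use strict';
  return {
    value: 10,
    regular() { return this; },
    arrow: () => this, // `this` of makeCounter, not the object
  };
}
const counter = makeCounter();
console.log(counter.regular() === counter); // true
console.log(counter.arrow() === counter);   // false
```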

Currying is a pattern where a function with more than one parameter is broken into multiple functions that, when called in series, accumulate all the required parameters one at a time. This technique can make code written in a functional style easier to read and compose. It is important to note that for a function to be curried, it needs to start as a single function and then be broken out into a sequence of functions, each of which accepts a single parameter.

There is a lot of mathematical and computer science theory behind currying in functional programming, and I encourage you to read more on Wikipedia. Interesting fact: currying is named after the mathematician Haskell Curry, not the food.

Basic example:

    const sum = x => y => x + y;

    // returns the number 3
    sum(2)(1);

    // returns a function y => 2 + y
    sum(2);

Note that sum(2)(1); produces the same result as we would have had if we had defined it as const sum = (x, y) => x + y; which we would call as sum(2, 1);.

If seeing more than one arrow gives you trouble, just realize that you are simply returning everything that is behind the first arrow. In this case it returns another function as its return value, because JavaScript has first-class functions. This approach also works because the nested returned function has access to the scope of its parent function, so y => x + y conveniently gets x from its parent.

First-class functions

In functional programming terms, JavaScript has first-class functions because it supports functions as arguments and return values.

    const someFunction = () => console.log('Hello World!');

    const firstClass1 = functionAsArgument => functionAsArgument();
    firstClass1(someFunction); // Hello World! is printed out

    const firstClass2 = () => someFunction;
    firstClass1(firstClass2()); // Hello World! is printed out

Practical example: curry as a partial application

This is an example of a partially applied function versus a curried function, both doing the same thing using jQuery's "on" function:

    const partiallyAppliedOnClick = handler => $('button').on('click', handler);

    const curryOn = handler => event => $('button').on(event, handler);
    const curryOnClick = handler => curryOn(handler)('click');

Curry functions are neat when used for carrying reusable code containers. You take a function with multiple arguments, and you know that one of those arguments will have a specific value, but the other is undecided. Therefore, by using curry as a fancy partial application, you can create a new function that will allow you to deal with undecided arguments only without repeating your code.

Practical example: curry for functional composition

Functional composition is a concept that is worthy of its own post. The problem we’re trying to solve by currying is that JavaScript is full of object-oriented approaches that can be difficult to use with functional code, especially if you want to be a purist (or a hipster) about it.

In functional composition you have a sequence of functions applied all on the same single argument as f(g(x)). This would be your example:

    // Object oriented approach
    const getUglyFirstCharacterAsLower = str => str.substr(0, 1).toLowerCase();

    // Functional approach
    const curriedSubstring = start => length => str => str.substr(start, length);
    const curriedLowerCase = str => str.toLowerCase();

    const getComposedFirstCharacterAsLower = str =>
      curriedLowerCase(curriedSubstring(0)(1)(str));

It is also very popular to use a compose function to compose your functions:

    const compose = (...fns) => fns.reduce((f, g) => (...args) => f(g(...args)));

Using the above-defined functions curriedSubstring and curriedLowerCase, you can then use compose to do this:

    const getNewComposedFirstCharacterAsLower = compose(curriedLowerCase, curriedSubstring(0)(1));

    if (getComposedFirstCharacterAsLower('Martin') === getNewComposedFirstCharacterAsLower('Martin')) {
      console.log('These two provide equal results.');
    }

A general-purpose curry helper can be written along these lines:

    function curry(fn) {
      if (fn.length === 0) {
        return fn;
      }

      function _curried(depth, args) {
        return function(newArgument) {
          if (depth - 1 === 0) {
            return fn(...args, newArgument);
          }
          return _curried(depth - 1, [...args, newArgument]);
        };
      }

      return _curried(fn.length, []);
    }

    function add(a, b) {
      return a + b;
    }

    var curriedAdd = curry(add);
    var addFive = curriedAdd(5);

    var result = [0, 1, 2, 3, 4, 5].map(addFive); // [5, 6, 7, 8, 9, 10]

Source: JavaScript ES6 curry functions with practical examples

Template literals are a new feature introduced in ES6. They provide an easy way to create multiline strings and perform string interpolation. Template literals are string literals that allow embedded expressions.

In earlier drafts of the ES6 specification, template literals were referred to as template strings. A template literal is enclosed in backtick (`) characters. Placeholders in a template literal are written with a dollar sign and curly braces (${expression}); any expression placed inside the braces is evaluated and its result is inserted into the string.

Basic example:

    let str1 = "Hello";
    let str2 = "World";

    let str = `${str1} ${str2}`;
    console.log(str); // Hello World

A generator gives us a new way to work with iterators and functions. A generator is a special type of function that can be paused one or more times in the middle of its execution and resumed later. The function* declaration (the function keyword followed by an asterisk) is used to define a generator function.

When a generator function is called, its code does not run. Instead, it returns a special object, called a Generator object, that manages the execution. Let's look at an example of generators in ES6.

Basic example:

    function* gen() {
      yield 100;
      yield;
      yield 200;
    }

    // Calling the generator function
    var mygen = gen();
    console.log(mygen.next().value);
    console.log(mygen.next().value);
    console.log(mygen.next().value);

    // Output
    // 100
    // undefined
    // 200

Both for..of and for..in statements iterate over lists; the values iterated on are different, though. for..in returns a list of keys on the object being iterated, whereas for..of returns a list of values of the numeric properties of the object being iterated.

Here is an example that demonstrates this distinction:

    let list = [4, 5, 6];

    for (let i in list) {
      console.log(i); // "0", "1", "2"
    }

    for (let i of list) {
      console.log(i); // 4, 5, 6
    }

Another distinction is that for..in operates on any object; it serves as a way to inspect properties on this object. for..of, on the other hand, is mainly interested in the values of iterable objects. Built-in objects like Map and Set implement a Symbol.iterator property that allows access to stored values.

    let pets = new Set(["Cat", "Dog", "Hamster"]);
    pets["species"] = "mammals";

    for (let pet in pets) {
      console.log(pet); // "species"
    }

    for (let pet of pets) {
      console.log(pet); // "Cat", "Dog", "Hamster"
    }

Although this has been a pretty long article to follow, I strongly believe that most of us have now learned what kinds of ES6 questions are asked in interviews.

ES6 Basic Interview Questions

1. Mention some popular features of ES6.

Some of the common ES6 features are:

- Supports constants/immutable variables.

- Block scope support for all variables, constants and functions.

- Introduction to arrow functions

- Handling extended parameter

- Default parameters

- Extended and template literals

- De-structuring assignment

- Promises

- Classes

- Modules

- Collections

- Supports Map/Set & Weak-map/Weak-Set

- Localization, meta-programming, internationalization

2. What are the object-oriented features supported in ES6?

The object-oriented features supported in ES6 are:

- Classes: We can create classes in ES6. The class keyword essentially builds a template from which we can later create objects. When a new instance of the class is created, the constructor method is invoked.

- Methods: Classes can contain methods, including static methods. A static method, unlike an instance method, is a function that is bound to the class itself. A static method can't be called on a class instance.

Let's take a look at getters and setters for a moment. Encapsulation is a fundamental notion in OOP. A crucial aspect of encapsulation is that data (object properties) should not be directly accessed or updated from outside the object. A getter (access) and a setter (modify) are special methods we define in our class to read or update a property.

- Inheritance: It is also possible for classes to inherit from one another. The parent is the class that is being inherited from, and the child is the class that inherits from the parent.
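The features above can be combined in one short sketch. The `Account` and `SavingsAccount` classes are hypothetical, and the underscore-prefixed `_balance` field is private by convention only (ES6 classes had no private fields).

```javascript
class Account {
  constructor(owner, balance = 0) {
    this.owner = owner;
    this._balance = balance; // internal by convention, not enforced
  }

  // Getter: read access through property syntax.
  get balance() {
    return this._balance;
  }

  // Setter: validates before updating.
  set balance(amount) {
    if (typeof amount !== 'number' || amount < 0) {
      throw new RangeError('balance must be a non-negative number');
    }
    this._balance = amount;
  }

  // Static method: called on the class, not on instances.
  static compare(a, b) {
    return a.balance - b.balance;
  }
}

// Inheritance: SavingsAccount is the child, Account the parent.
class SavingsAccount extends Account {
  constructor(owner, balance, rate) {
    super(owner, balance);
    this.rate = rate;
  }

  addInterest() {
    this.balance = this.balance * (1 + this.rate);
    return this.balance;
  }
}

const basic = new Account('Ann', 100);
const savings = new SavingsAccount('Raj', 200, 0.5);

console.log(savings.addInterest());           // 300
console.log(Account.compare(basic, savings)); // -200
console.log(typeof basic.compare);            // 'undefined'
console.log(savings instanceof Account);      // true
```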

3. Give a thorough comparison between ES5 and ES6.

| ES5 | ES6 |
| --- | --- |
| ES5 is the fifth edition of ECMAScript, introduced in 2009. | ES6 is the sixth edition of ECMAScript, introduced in 2015. |
| The primitive data types string, boolean, number, null, and undefined are supported by ES5. | ES6 adds to the JavaScript data types: a new primitive data type, symbol, was introduced to support unique values. |
| In ES5, variables could be defined only with the var keyword. | In ES6, in addition to var, there are two new ways to define variables: let and const. |
| Both the function and return keywords are used to define a function in ES5. | Arrow functions are a newly added feature in ES6 that do not require the function keyword to define a function. |
| In ES5, a for loop is used to iterate over elements. | ES6 introduced the for...of loop to iterate over the values of iterable objects. |

4. What is the difference between let and const? What distinguishes both from var?

Before ES6, every variable in JavaScript was declared with the var keyword. var is function-scoped: a variable declared with it can be accessed anywhere within the enclosing function. When we needed to create a new scope, we wrapped the code in a function.

Both let and const have block scope. If you use these keywords to declare a variable, it will only exist within the innermost block that surrounds it. If you declare a variable with let inside a block (for example, the body of an if statement or a for loop), it can only be accessed within that block.

Variables declared with the let keyword are mutable, which means that their values can be changed. let is akin to var, but with the added benefit of block scoping. Variables declared with the const keyword are block-scoped and cannot be reassigned. Note that const does not make the value itself immutable: the properties of an object held in a const variable can still be modified.
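The scoping and reassignment rules can be sketched in one function; `scopeDemo` and its variable names are invented for this illustration.

```javascript
function scopeDemo() {
  const log = [];

  if (true) {
    var functionScoped = 'var';
    let blockScoped = 'let';
    const alsoBlockScoped = 'const';
    log.push(blockScoped, alsoBlockScoped);
  }

  log.push(functionScoped);     // var is visible outside the block
  log.push(typeof blockScoped); // 'undefined': let stayed inside the block

  let counter = 1;
  counter = 2;                  // let can be reassigned
  log.push(counter);

  const settings = { theme: 'dark' };
  settings.theme = 'light';     // mutating the object is allowed
  log.push(settings.theme);

  try {
    settings = {};              // reassigning a const throws at run time
  } catch (err) {
    log.push(err.name);
  }

  return log;
}

console.log(scopeDemo());
// ['let', 'const', 'var', 'undefined', 2, 'light', 'TypeError']
```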

5. Discuss the arrow function.

Arrow functions were introduced in ES6 as a shorthand syntax for writing functions. An arrow function's definition consists of its parameters, followed by an arrow (=>) and the function's body.

The arrow function is also known as the 'fat arrow' function. Arrow functions cannot be used as constructors.

    const function_name = (arg_1, arg_2, arg_3, ...) => {
      // body of the function
    }

A few things to note:

- It reduces the size of the code.

- For a body that is a single expression, the return statement can be omitted.

- It binds the context (this) lexically.

- For a body that is a single expression, the braces around the body are not required.

- It doesn't work with new.
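The syntax variants in the notes above look like this in practice; the function names are arbitrary examples.

```javascript
// Block body: braces and an explicit return.
const square = (n) => {
  return n * n;
};

// Concise body: a single expression is returned implicitly.
const cube = n => n * n * n;

// No parameters need empty parentheses; two or more need parentheses.
const answer = () => 42;
const add = (a, b) => a + b;

// Returning an object literal from a concise body needs wrapping parentheses.
const toPoint = (x, y) => ({ x, y });

console.log(square(3), cube(2), answer(), add(1, 2)); // 9 8 42 3
console.log(toPoint(1, 2)); // { x: 1, y: 2 }
```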

6. When should one not use arrow functions?

One should not use arrow functions in the following cases:

- Function Hoisting, Named Functions:

As arrow functions are anonymous, we cannot use them when we want function hoisting or when we want to use named functions.

- Object methods:

    var a = {
      b: 7,
      func: () => {
        this.b--;
      }
    }

The value of b does not drop when you call a.func(). This is because this is not bound to the object a; the arrow function inherits this from its enclosing scope.

- Callback functions with dynamic context:

    var btn = document.getElementById('clickMe');
    btn.addEventListener('click', () => {
      this.classList.toggle('on');
    });

We'd get a TypeError if we clicked the button. This is because this is not bound to the button, but is inherited from the enclosing scope.

- this/arguments:

Since arrow functions don’t have this/arguments of their own and they depend on their outer context, we cannot use them in cases where we need to use this/arguments in a function.

7. What is the generator function?

This is a newly introduced feature in ES6. The Generator function returns an object after generating several values over time. We can iterate over this object and extract values from the function one by one. A generator function returns an iterable object when called. In ES6, we use the * sign for a generator function along with the new ‘yield’ keyword.

function *Numbers() { let num = 1; while(true) { yield num++; } } var gen = Numbers(); // Loop to print the first // 5 Generated numbers for (var i = 0; i < 5; i++) { // Generate the next number document.write(gen.next().value); // New Line document.write("<br>"); }Output:

1 2 3 4 5

The yielded value becomes the next value in the sequence each time yield is reached. Also, generators compute their output values on demand, allowing them to efficiently represent expensive-to-compute sequences or even infinite sequences.
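The on-demand behaviour can also be seen outside the browser. The sketch below avoids document.write so it runs in Node.js as well; take and range are hypothetical helper names introduced only for this example.

```javascript
// Infinite generator: each value is computed only when it is requested.
function* naturals() {
  let n = 1;
  while (true) {
    yield n++;
  }
}

// Hypothetical helper: collect at most `count` values from an iterator.
function take(iterator, count) {
  const out = [];
  for (let i = 0; i < count; i++) {
    const { value, done } = iterator.next();
    if (done) break;
    out.push(value);
  }
  return out;
}

const firstFive = take(naturals(), 5);
console.log(firstFive); // [ 1, 2, 3, 4, 5 ]

// A finite generator is iterable, so it works with spread and for...of.
function* range(start, end) {
  for (let i = start; i < end; i++) yield i;
}
const spreadRange = [...range(0, 3)];
console.log(spreadRange); // [ 0, 1, 2 ]
```

Because naturals() never finishes, spreading it directly would loop forever; limiting the number of next() calls, as take does, is what makes an infinite sequence usable.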

8. What is the “spread” operator in ES6?

The spread operator is written as three dots (...). It expands an iterable (such as an array or a string) into individual elements, for example as the arguments of a function call or the items of a new array. It is commonly used to make shallow copies of arrays and objects, and it makes code more concise and readable.
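A minimal sketch of these uses follows. The variable names and values are invented for illustration; the point to notice is that the copy is shallow, so nested values are still shared.

```javascript
// Shallow copy of an object with one property overridden.
const original = { name: "Asha", tags: ["es6"] };
const copy = { ...original, name: "Ravi" };

// Top-level properties are independent...
console.log(original.name, copy.name); // Asha Ravi

// ...but nested objects are shared, because the copy is shallow.
copy.tags.push("spread");
console.log(original.tags.length); // 2

// Spreading a string splits it into characters.
const letters = [..."abc"];

// Spreading an array passes its items as separate arguments.
const max = Math.max(...[3, 9, 4]);

console.log(letters, max); // [ 'a', 'b', 'c' ] 9
```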

The spread operator can be used to join two arrays together or to concatenate them.

let arr1 = [4, 5, 6];
let arr2 = [1, 2, 3, ...arr1, 7, 8, 9, 10];
console.log(arr2);

Output:

[ 1, 2, 3, 4, 5, 6, 7, 8, 9, 10 ]

9. Explain Destructuring in ES6.

Destructuring was introduced in ES6 as a way to extract data from arrays and objects into distinct variables. It makes it possible to pull individual elements or properties out of arrays and objects in a single statement. The following is an example.

let greeting = ['Good', 'Morning'];
let [g1, g2] = greeting;
console.log(g1, g2);

Output:

Good Morning

10. What are Promises in ES6?

Asynchronous programming is a core concept in JavaScript. In asynchronous programming, long-running operations are started without blocking the main thread, and their results are handled later. Promises are the standard way to deal with asynchronous programming in ES6. A promise starts out pending and, depending on the outcome of the operation, is either fulfilled (resolved with a value) or rejected (with an error). Callbacks were used to cope with asynchronous programming before promises were introduced in ES6.

However, it caused the problem of callback hell, which was addressed with the introduction of promises.

(A callback is a function that is passed as an argument to another function and is called after that function has finished its work. Callbacks are very useful when working with events in JavaScript.

When we use callbacks in our web applications, it’s common for them to get nested. Deeply nested callbacks make the code hard to read and maintain, which is what is meant by callback hell.)
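The sketch below shows how a promise chain keeps sequential asynchronous steps flat instead of nested. halveLater is a hypothetical function written for this example; it uses setTimeout to stand in for any real asynchronous operation.

```javascript
// Hypothetical async operation: resolves with n / 2 for even input,
// rejects with an Error for odd input.
function halveLater(n) {
  return new Promise((resolve, reject) => {
    setTimeout(() => {
      if (n % 2 === 0) resolve(n / 2);
      else reject(new Error(`${n} is odd`));
    }, 10);
  });
}

// Each .then() runs after the previous step is fulfilled.
// A single .catch() handles a rejection from any step.
const chained = halveLater(8)
  .then((four) => halveLater(four))
  .then((two) => halveLater(two))
  .then((one) => halveLater(one)) // rejects here: 1 is odd
  .catch((err) => `stopped: ${err.message}`);

chained.then((result) => console.log(result)); // stopped: 1 is odd
```

With plain callbacks, each of the four steps would be nested inside the previous one, and error handling would have to be repeated at every level.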

11. Explain the Rest parameter in ES6.

It’s a new feature in ES6 that enhances the ability to manage arguments. Indefinite arguments can be represented as an array using rest parameters. We can invoke a function with any number of parameters by utilizing the rest parameter.

function display(...args) {
  let ans = 1; // start at 1, otherwise the product is always 0
  for (let i of args) {
    ans *= i;
  }
  console.log("Product = " + ans);
}

display(4, 2, 3);

Output:

Product = 24

12. Discuss the template literals in ES6.

Template literals are a brand-new feature in ES6. It makes producing multiline strings and performing string interpolation simple.

Template literals are string literals that allow embedded expressions.
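A short sketch of both features, interpolation and multiline strings, is shown here. Template literals are written between backticks, and each ${...} placeholder is replaced by the value of the expression inside it. The variable names and values are invented for illustration.

```javascript
const user = "Meera";
const items = 3;
const price = 2.5;

// Any expression can appear inside ${...}, not only variable names.
const message = `Hello, ${user}! Your total is ${(items * price).toFixed(2)}.`;
console.log(message); // Hello, Meera! Your total is 7.50.

// A template literal can span several lines without "\n" or concatenation.
const multiline = `Line one
Line two`;
console.log(multiline.split("\n").length); // 2
```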

Template literals were called template strings in earlier drafts of the ES6 specification. The backtick (`) character is used to enclose template literals. The dollar sign and curly brackets (${expression}) are used to denote placeholders in template literals. If we need to use an expression within the backticks, we can put it in the (${expre

- SQL Interview Questions and Answers

SQL, or Structured Query Language, is a language that is used to interact or communicate with a database. This language assists in performing tasks like retrieval, insertion, updating, and deletion of data from databases. This information is commonly asked in SQL interview questions. An ANSI (American National Standards Institute) standard, SQL helps developers execute queries, insert records in tables, update records, create databases, create tables, or delete tables.

No doubt other programming languages such as ASP, PHP, and ColdFusion helped in making the Internet very dynamic, but SQL has revolutionized how users interact with websites in general. Any reputed webpage that allows the user to provide content uses SQL.

Most Frequently Asked SQL Interview Questions

Here in this article, we will be listing frequently asked SQL Interview Questions and Answers with the belief that they will be helpful for you to gain higher marks. Also, to let you know that this article has been written under the guidance of industry professionals and covered all the current competencies.

1. What is SQL and explain its components?

SQL is a standard language used for accessing and manipulating databases. It stands for Structured Query Language. It is commonly used by web applications written in server-side scripting languages, like ASP, PHP, etc.

It consists of three components, listed below:

- Data Definition Language.

- Data Manipulation Language.

- Data Control Language.

2. Explain the types of joins in SQL?

In SQL, a join is used to combine records from two or more tables in a database, based on a related column between them.

Here are the four types of joins in SQL:

- INNER JOIN: It returns records that have matching values in both tables

- LEFT JOIN: It returns all records from the left table and the matched records from the right table

- RIGHT JOIN: Right Join returns all records from the right table and the matched records from the left table

- FULL JOIN: It returns all records when there is a match in either left or right table

3. What is a Primary key? Explain

A primary key is a column (or set of columns) in a table that is designated to uniquely identify the rows in that table. A primary key holds a unique value for each row and cannot contain null values.

4. Explain Constraints in SQL?

A constraint is a rule used to limit the type of data that can be inserted into a table, in order to maintain the integrity and accuracy of the data inside the table.

It can be divided into two types.

- Column level constraints

- Table-level constraints

These are the most common constraints that can be applied to a table.

- NOT NULL

- DEFAULT

- UNIQUE

- PRIMARY KEY

- FOREIGN KEY etc

5. What is the difference between HAVING and a WHERE in SQL?

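The WHERE clause filters individual rows before any grouping takes place, whereas the HAVING clause filters groups after aggregation.

WHERE can be used without GROUP BY. HAVING is normally used together with GROUP BY; in databases that allow it on its own, the whole result set is treated as a single group.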

6. Explain the difference between SQL and MySQL.

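SQL is a query language, whereas MySQL is a relational database management system that uses SQL. The points below compare MySQL with Microsoft SQL Server, which is what this question usually refers to.

- MySQL is open source and free to use, but SQL Server is a proprietary product.

- MySQL offers updatable views, but SQL Server also offers indexed views, which are more powerful.

- MySQL has only limited XML functions, but SQL Server has a native XML data type.

- MySQL does not provide built-in automatic tuning, but SQL Server does.

- Older MySQL releases did not support user-defined functions, stored procedures, or cursors; current releases support them, as SQL Server does.

- Transaction support in MySQL depends on the storage engine (InnoDB supports transactions, MyISAM does not), but SQL Server supports transactions throughout.

- MySQL does not offer FULL OUTER JOIN, but SQL Server does.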

7. What is Trigger in SQL? Explain

In SQL, a trigger is a database object that is associated with a table. It is activated when a defined action is executed on the table, namely when an INSERT, UPDATE, or DELETE statement runs against it. Its activation time can be BEFORE or AFTER the action.

8. Explain Normalization and what are the advantages of it?

Normalization is a systematic approach of decomposing tables to remove data redundancy and undesirable characteristics such as insertion, update, and deletion anomalies. It is a multi-step process that puts data into tabular form and removes duplicated data from the tables.

Advantages

- It helps to reduce redundant data.

- It gives a much more flexible database design.

- It makes database security easier to handle.

- It improves data consistency within the database.

Normal forms

- 1NF(First Normal Form)

- 2NF(Second Normal Form)

- 3NF(Third Normal Form)

- BCNF(Boyce and Codd Normal Form)

- 4NF(Fourth Normal Form)

9. How to find 3rd highest salary of an employee from the employee table in SQL?

SELECT DISTINCT salary FROM employee e1 WHERE 3-1 = (SELECT COUNT(DISTINCT salary) FROM employee e2 WHERE e2.salary > e1.salary)

An alias is used to rename a table or column within a specific SQL statement. The renaming is temporary and does not change the actual name in the database. It can be applied to tables as well as fields.

The basic syntax of a table alias

SELECT t.field1, t.field2 FROM table_name AS t WHERE <condition>;

The basic syntax of a column alias

SELECT field1 AS column1 FROM table_name WHERE <condition>;

11. Explain Scalar functions in SQL?

It is a function that takes one or more values but returns a single value. It is based on user input and returns a single value.

- UCASE()

- LCASE()

- MID()

- LEN()

- ROUND()

- NOW()

- FORMAT()

12. Which aggregate functions are available in SQL?

An aggregate function performs a calculation on a set of values and returns a single value as output. Aggregate functions ignore NULL values, with the exception of COUNT(*), which counts every row.

SQL provides many aggregate functions that are listed below.

- AVG()

- COUNT()

- SUM()

- MIN()

- MAX() etc

13. How to copy values from one column to another in SQL?

UPDATE `table_name` SET new_field=old_field

14. What are the advantages of SQL? Explain

There are many advantages of SQL, and some of them are mentioned below:

- No coding needed: It is easy to manage a database without writing a substantial amount of code.

- Well-defined standards: SQL follows long-established standards maintained by ISO and ANSI.

- Portability: SQL databases can be used on PCs, servers, laptops, and mobile phones.

- Interactive Language

- Multiple data views

SQL was initially developed in the early 1970s at IBM by Donald D. Chamberlin and Raymond F. Boyce.

16. What is a Database? Explain

It is a collection of data that is organized to be easily accessed, managed and updated. It is distributed into rows, columns, and tables. It’s indexed to make it easier to find related information.

17. Explain table and field in SQL?

Table: It is a collection of related data that consists of rows and columns. It has a specified number of columns but can have any number of rows.

Field: It is a column in a table that is designed to maintain specific information about all records in the table.

18. Explain Unique key in SQL.

A unique key is a set of one or more fields or columns of a table that uniquely identifies a record in the table. It is similar to a primary key, but it can accept null values (only one in some databases).

19. Explain Foreign key in SQL?

It is a collection of fields in one table that uniquely identifies a row of another table. In simple words, the foreign key is defined in a second table, but it refers to the primary

20. Write a query to display the current date in SQL?

SELECT CURDATE();

21. Explain the difference between DROP and TRUNCATE commands in SQL?

TRUNCATE

- It removes all rows from a table.

- It does not require a WHERE clause.

- Truncate cannot be used with indexed views.

- It is performance wise faster.

DROP

- It removes a table from the database.

- All of the table’s rows, indexes, and privileges are also removed when this command is used.

- The operation cannot be rolled back.


22. Write a query to find the names of users that begin with “um” in SQL?

SELECT * FROM employee WHERE name LIKE 'um%';

A clause specifies the source of a rowset to be operated upon in a DML statement. Clauses are very commonly used with the SELECT, UPDATE, and DELETE statements.

SQL provides with the following clauses that are given below:

- WHERE

- GROUP BY

- HAVING

- ORDER BY etc

24. How to use distinct and count in SQL query? Explain

count(): It returns the number of rows in a table satisfying the condition specified in the WHERE clause. It would return 0 if there were no matching conditions.

Syntax:

SELECT COUNT(*) FROM category WHERE status = 1;

DISTINCT: It is used to return only different values. In a table, a column often contains many duplicate values, and sometimes we want to list only the different values. It can also be used inside aggregate functions, for example COUNT(DISTINCT column).

Syntax:

SELECT DISTINCT class FROM College ORDER BY class;

25. What are stored procedures in SQL and how can we use them?

A stored procedure is a set of precompiled SQL statements that is used to perform a particular task. A procedure has a name, a parameter list, and a body of SQL statements. It helps to reduce network traffic and improve performance.

Example

CREATE PROCEDURE simple_function AS SELECT first_name, last_name FROM employee;

EXEC simple_function;

- React js interview questions and Answers

ReactJS is a JavaScript library for building reusable user interface components. Components are written in JavaScript, usually with the JSX syntax, and what React renders in the browser is ultimately HTML. You can use ReactJS to build all kinds of single-page applications. Our recently updated React Interview Questions can help you crack your next interview.

Short Questions About React

| Question | Answer |
| --- | --- |
| What is the latest version of React? | 17.0.2, released on March 22, 2021 |
| When was React first released? | 29th May 2013 |
| React JS is created by | Jordan Walke |
| License of ReactJS | MIT License |

React Developer Interview Questions

Here in this article, we will be listing frequently asked React js interview questions and Answers with the belief that they will be helpful for you to gain higher marks. Also, to let you know that this article has been written under the guidance of industry professionals and covered all the current competencies.

1. What is the difference between Virtual DOM and Real DOM?

| Real DOM | Virtual DOM |
| --- | --- |
| The update process is very slow in this. | The update process is much faster here. |
| It can directly update the HTML. | It can’t directly update the HTML. |
| It creates a new DOM if the element is updated. | It updates the JSX if there is any update on an element. |
| DOM manipulation related to a Real DOM is very complicated. | DOM manipulation related to a Virtual DOM is effortless. |
| There is a lot of memory wastage in a Real DOM. | There are no cases of memory wastage in a Virtual DOM. |

2. Why should we not update the state directly?

One should never update the state directly because of the following reasons:

- If you update it directly, calling setState() afterward may just replace the update you made.

- Mutating this.state directly does not trigger a re-render. setState(), by contrast, does not change this.state immediately; it creates a pending state transition, so reading this.state right after calling it may still return the present value.

- You will lose control of the state across all components.
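The first two points can be illustrated without React. The sketch below is plain JavaScript and is not React's implementation: createStore is a hypothetical stand-in whose setState builds a new state object and notifies a listener, which plays the role of a re-render.

```javascript
// Hypothetical mini store (an assumption for illustration, not a React API).
function createStore(initialState, onChange) {
  let state = initialState;
  return {
    getState: () => state,
    setState(partial) {
      state = { ...state, ...partial }; // new object, old one is not mutated
      onChange(state); // stands in for a re-render
    },
  };
}

let renders = 0;
const store = createStore({ count: 0 }, () => {
  renders++;
});

// Direct mutation: the value changes, but nothing is notified.
store.getState().count = 5;
const rendersAfterMutation = renders; // 0

// Proper update: the listener runs, and the direct edit is overwritten.
store.setState({ count: 1 });
const rendersAfterSetState = renders; // 1

console.log(rendersAfterMutation, rendersAfterSetState, store.getState().count);
// prints: 0 1 1
```

The directly assigned value 5 is never rendered and is replaced by the later setState call, which is the failure mode the list above describes.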

Note: Out of all the react questions, this is one that actually helps the interviewer to judge your level of understanding in this subject. Read the answer carefully to understand it properly.

3. How do you call a function inside render() in React?

To call a function inside a render in React, use the following code:

Example

import React, { Component } from 'react';

export default class PatientTable extends Component {
  constructor(props) {
    super(props);
    this.state = { checking: false };
    this.renderIcon = this.renderIcon.bind(this);
  }

  renderIcon() {
    console.log("came here");
    return <div>Function called</div>;
  }

  render() {
    // Note the parentheses: the function must be invoked, not just referenced.
    return <div className="patient-container">{this.renderIcon()}</div>;
  }
}

Props stands for properties in React. They are read-only values that must not be modified by the component that receives them. Props are passed down from the parent to the child component throughout the app. A child component cannot send a prop back to the parent component. This helps in maintaining the unidirectional data flow, and props are used to render dynamically generated data.

5. What are the new features in React?

React 16.9 introduced the following features:

- Measuring performance using

- Improved Asynchronous act() for testing

- No more crashing when calling findDOMNode() inside a tree

- Efficient handling of infinite loops caused due to the setState inside of useEffect has also been fixed

JSX stands for JavaScript XML. It is a syntax extension used by React that combines the expressiveness of JavaScript with HTML-like markup. This makes the structure of a component easy to read and understand. JSX is compiled to regular JavaScript function calls before it runs in the browser.

Example

render(){

return(

<div> <h1> Welcome To Best Interview Question!!</h1> </div>

);

}

7. What are controlled and uncontrolled components?

A Controlled Component is one that takes the current value through the props and notifies of any changes through callbacks such as onChange.

Example:

<input type="text" value={value} onChange={handleChange} />

An uncontrolled component is one storing its own state internally, and you can query the DOM via a ref to find its current value as and when needed. Uncontrolled components are a bit closer to traditional HTML.

Example:

<input type="text" defaultValue="foo" ref={inputRef} />

Note: React is a widely used open-source library used for building interactive user interfaces. It is a web platform licensed under MIT. This is one of the very popular react interview questions.

8. What is function based component in React?

A function-based component is a component that is written as a JavaScript function. It takes props as an argument and returns JSX.

Example

import React from 'react';

const XYZ = (props) => {
  return (
    <div>
      <h1>React Interview Questions</h1>
    </div>
  );
};

export default XYZ;

9. What is the difference between React Native and React?

| React | React Native |
| --- | --- |
| Released in the year 2013. | Released in the year 2015. |
| It can be executed on multiple platforms. | It is not platform-independent. It will need some extra effort to work on different platforms. |
| Generally used for developing web applications. | Generally used for developing mobile applications. |
| Here, Virtual DOMs are used to render browser code. | Here, an in-built API is used to render code for mobile applications. |
| It uses the JS library and CSS for animations. | It has its own in-built animation libraries. |
| It uses the React Router to navigate through web pages. | It has an in-built navigator to navigate through mobile application pages. |

10. What is the difference between Element and Component?

| React Component | React Element |
| --- | --- |
| It is a simple function or class used for accepting input and returning a React element. | It is a simple object used for describing a DOM node and the attributes or properties as per your choice. |
| The React Component has to keep the references to its DOM nodes and also to the instances of the child components. | The React Element is an immutable description object, and in this, you cannot apply any methods. |

11. What are refs used for in React?

Refs are a feature in React with the following uses:

- Accessing DOM and React elements that you have created.

- Changing the value of a child component without using props and others.

12. How React is different from Angular?

| ReactJS | AngularJS |
| --- | --- |
| This is an open-source JS library used for building user interfaces and uses a controller view pattern. | This is a JS-based open-source front end web framework and uses an MVC based pattern. |
| It is developed and maintained by Facebook Developers. | It is developed and maintained by Google Developers. |
| Based on JavaScript with the JSX syntax extension. | Based on JavaScript and HTML. |
| It is straightforward to learn. | Comparatively, it is difficult to learn. |
| It supports server-side rendering. | It supports client-side rendering. |
| It has a uni-directional data binding methodology. | It has a bi-directional data binding methodology. |
| It is based on a Virtual DOM. | It is based on a regular DOM. |

Note: Before getting confused with the plethora of React coding interview questions, you need to ensure the basics. This question will help you to understand the basics of reactjs and make you even more confident enough to jump onto the advanced questions.

13. What is the difference between componentWillMount and componentDidMount?

| componentWillMount() | componentDidMount() |
| --- | --- |
| This is called only once during the component lifecycle immediately before the component is rendered to the server. It is used to assess props and do any extra logic based upon them. | It is called only on the client end, meaning, it is usually performed after the initial render when a client has received data from the server. |
| Used to perform state changes before the initial render. | It allows you to perform all kinds of advanced interactions including state changes. |
| No way to “pause” rendering while waiting for the data to arrive. | It can be used to set up long-running processes within a component. |
| Users should avoid using any async initialization in order to minimize any side-effects or subscriptions in this method. | It is invoked just immediately after the mounting has occurred. Putting the data loading code in this just ensures that data is fetched from the client’s end. Here, the data does not load until the initial render is done. Hence, users must set an initial state properly to eradicate undefined state errors. |

14. What is the difference between Function Based Component and Class Based Component?

The main difference is one of syntax. A function-based component takes props as an argument and returns JSX; it has traditionally been called a stateless component. In a class-based component, we extend the predefined React.Component class and use its properties and methods as needed.

A class-based component can hold state, and that state can be passed as props to child components. In a class-based component we can also use the different lifecycle methods of a component.

15. What are arrow functions? How are they used?

Arrow functions, also called ‘fat arrow’ functions (=>), are a brief syntax for writing function expressions. They are used to bind the context of the component, because methods are not auto-bound by default in ES6 classes. Arrow functions are also useful when working with higher-order functions.

Example

// General way
render() {
  return (
    <MyInput onChange={this.handleChange.bind(this)} />
  );
}

// With an arrow function
render() {
  return (
    <MyInput onChange={(e) => this.handleChange(e)} />
  );
}

16. What is the purpose of render() in React?

It is compulsory for each React class component to have a render() method. If more than one element needs to be rendered, they must be grouped inside one enclosing tag such as <form> or <div>. This function returns a single React element, which is the representation of a native DOM component. It must return the same result every time it is invoked with the same props and state.

17. What is a state in React and how is it used?

States are at the heart of components. They are the source of data and must be kept simple. States determine components’ rendering and behavior. They are mutable and create dynamic and interactive components, and can be accessed via this.state.

Note: From the vast number of react developer interview questions, answering this will help you to align yourself as an expert in the subject. This is a very tricky question that often makes developers nervous.

18. What is the component lifecycle in ReactJS?

Component lifecycle is an important part of ReactJS. It falls into three phases: initialization (mounting), property and state updates, and destruction (unmounting). Lifecycle methods are a way of managing the state and properties of a component during each phase.

Events are the triggered reactions to actions like mouse hover, click, key press, etc.

Redux is used to handle data in a reliable manner. It performs task accurately and ensures that the data has been controlled. You can also apply filters if only a specific part of data is required.


Flux is an architectural pattern that helps to maintain a unidirectional data flow. It controls derived data and lets separate parts of the application communicate without creating issues. The Flux pattern is generic and is not specific to React applications.

The server needs to be regularly checked for updates, for example to see whether new comments have arrived. This process is known as polling. The client asks the server for updates at a fixed interval, such as every 5 seconds.

23. In what cases would you use a Class Component over a Functional Component?

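Before Hooks were introduced in React 16.8, a component that needed state or lifecycle methods had to be a class component; otherwise a functional component was enough. With Hooks, functional components can also hold state and run side effects.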

24. What can you do if the expression contains more than one line?

Enclosing the multi-line JSX expression in parentheses is the best option. The parentheses prevent automatic semicolon insertion after return, so the expression is evaluated as intended.

25. Is it possible to use the word Class in JSX?

No. This is because class is a reserved word in JavaScript, and JSX gets translated to JavaScript. Use className instead.


26. Is it possible to display props on a parent component?

Yes. This is a complex process, and the best way to do it is by using a spread operator.

React is a JavaScript Library which is used to show content to user and handle user interaction.

A component is a building block of any React app and is written as either a JavaScript function or a class. Ex. Header Component, Footer Component etc.

There are two types of Components.

- Function Based Component

- Class Based Component

29. What are Class Based Components?

A class-based component is a JavaScript class. In these components we extend the predefined React Component class in our own component.

Example

import React, { Component } from 'react';

class ABC extends Component {
  render() {
    return (
      <div>
        <h1>Hello World</h1>
      </div>
    );
  }
}

export default ABC;

30. What is a Higher Order Component (HOC)?

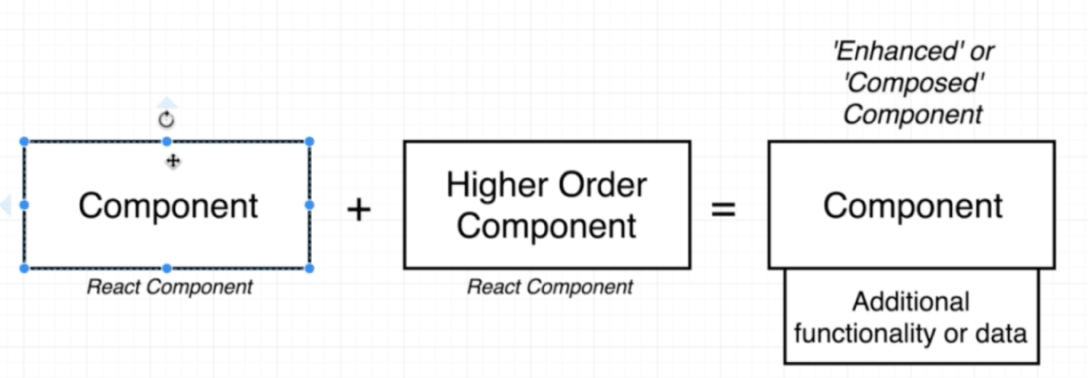

A higher-order component or HOC in React is basically an advanced technique used for reusing component logic. HOCs are not a part of the React API. They are a pattern emerging from the React’s compositional nature.

A higher-order component is any function that takes a component and returns a new component. The term pure function in React means that the return value of the function is entirely determined by its inputs and that the execution of the function produces no side effects.
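Both ideas can be sketched in plain JavaScript without React. In this simplified model a "component" is just a pure function from props to a markup string; real React components return elements rather than strings, and the names Greeting and withBorder are hypothetical.

```javascript
// Stand-in component: a pure function, its output depends only on props.
const Greeting = (props) => `<h1>Hello, ${props.name}</h1>`;

// Higher-order component: takes a component and returns a new component
// that wraps the original output, leaving the original untouched.
const withBorder = (Wrapped) => (props) =>
  `<div class="border">${Wrapped(props)}</div>`;

const BorderedGreeting = withBorder(Greeting);

const html = BorderedGreeting({ name: "Ada" });
console.log(html); // <div class="border"><h1>Hello, Ada</h1></div>

// Purity check: the same input always gives the same output.
console.log(Greeting({ name: "Ada" }) === Greeting({ name: "Ada" })); // true
```

The wrapping logic in withBorder can be reused with any other component, which is the reuse benefit that higher-order components provide.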

The ReactDOMServer is an object which enables you to render components as per a static markup. Generally, it is used on a Node Server.

33. How to apply validation on props in React?

The propTypes property of a component (for example, App.propTypes) is used for validating props in React components.

Here is the syntax:

class App extends React.Component {

render() {}

}

App.propTypes = { /* definition */ };

34. How do you create a component in React?

There are 3 ways to create a component in React:

- Using a deprecated variable function

- Using a Class

- Using a Stateless Functional Component

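1. Using a deprecated variable function

In this, you need to declare a variable in your JavaScript file/tag and use the React.createClass() function to create a component using this code. Note that React.createClass() was deprecated in React 15.5 and removed from the core package in React 16.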

var MyComponent = React.createClass({

render() {

return <div>

<h1>Hello World!</h1>

<p>This is my first React Component.</p>

</div>

}

})

Now, add a div element with a unique ID to the page and then link the above script into the HTML page.

<div id="react-component"></div>

Use the ReactDOM.render() function, which takes 2 arguments: the React component and the target DOM element.

ReactDOM.render(<MyComponent />, document.getElementById('react-component'))

Finally, save the HTML page to display the result.

2. Using a Class

JavaScript has supported classes since ES6. Any React developer can easily create a component by declaring a class that extends (inherits from) React.Component.

3. Using a Stateless Functional Component

It might sound a bit complex, but this basically means that a normal function will be used in place of a variable to return a react component.

Create a const called MyComponent and set it equal to an arrow function. Use the arrow, =>, to declare the logic of the function as follows:

const MyComponent = () => {
  return <div>
    <h1>Hello!</h1>
    <p>This is my first React Component.</p>
  </div>
}

35. How do you link one page to another page in React?

There are two ways to link one page to another in React:

Using React Router: Use the <Link> component from react-router to link the first page to the second one.

<Link to='/href'></Link>

36. How do you reload the current page in React?

Reloading/Refreshing a page using Javascript:

Use Vanilla JS to call the reload method for the specific page using the following code:

window.location.reload(false);

37. How do you redirect to another page in React?

The best way to redirect a page in React.js depends on what is your use case. Let us get a bit in detail:

If you want to protect your route from unauthorized access, use the <Redirect> component (available in React Router v5 and earlier) and try this:

Example

import React from 'react'

import { Redirect } from 'react-router-dom'

const ProtectedComponent = () => {
  // authFails is a placeholder for your own authentication check.
  if (authFails) {
    return <Redirect to='/login' />
  }
  return <div> My Protected Component </div>
}

38. How do you reference a DOM element in React?

In React class components, the this.refs object on the component instance can be used to access DOM elements. This string-ref API is considered legacy; refs created with React.createRef() or callback refs are preferred.

Note: One of the most tricky react advanced interview questions, you need to answer this question with utmost clarity. Maybe explaining it with a short example or even a dry run would help you get that dream job.

React Hooks were brought in by the React team to handle state management and side effects in function components. Hooks make the code simpler by enabling us to write React applications using only function components.

40. How to call one component from another component in reactjs?

To render a component with another component in Reactjs, let us take an example:

Let us suppose there are 2 components as follows:

Example

Component 1

class Header extends React.Component {
  constructor() {
    super();
  }
  checkClick(e, notyId) {
    alert(notyId);
  }
}
export default Header;

Component 2

class PopupOver extends React.Component {
  constructor() {
    super();
  }
  render() {
    return (
      <div className="displayinline col-md-12 ">
        Hello
      </div>
    );
  }
}
export default PopupOver;

Now, to call Component 1's checkClick from Component 2, render PopupOver inside Header and pass the function as a prop:

import React from 'react';

class Header extends React.Component {
  constructor() {
    super();
  }
  checkClick(e, notyId) {
    alert(notyId);
  }
  render() {
    return (
      <PopupOver func={this.checkClick} />
    );
  }
}

class PopupOver extends React.Component {
  constructor(props) {
    super(props);
    // Call the function received from Header.
    this.props.func(this, 1234);
  }
  render() {
    return (
      <div className="displayinline col-md-12 ">
        Hello
      </div>
    );
  }
}

export default Header;

React Router is a widely used routing library for React; it is a separate package rather than part of React itself. React Router helps to keep your UI in sync with the respective URL. It has a simple API with powerful features like lazy loading, location transition handling, and dynamic route matching built right in.

42. How to render an array of objects in react?

There are two ways to render an array of objects:

First:

render() {
  const data = [{ "name": "test1" }, { "name": "test2" }];
  const listItems = data.map((d) => <li key={d.name}>{d.name}</li>);
  return (
    <div>
      {listItems}
    </div>
  );
}

Or, you could directly write the map function in the return:

render() {
  const data = [{ "name": "test1" }, { "name": "test2" }];
  return (
    <div>
      {data.map(function(d, idx) {
        return (<li key={idx}>{d.name}</li>)
      })}
    </div>
  );
}

43. What is the difference between state and props in React?

| State | Props |
| --- | --- |
| This is the data maintained inside the component. | This is the data passed in from a parent component. |
| The component updates it itself using the setState function. | These are read-only inside the child component; they change only when the parent passes new values. |

Note: React is a widely used open-source JavaScript library for building interactive user interfaces. It is licensed under the MIT license.

44. Which lifecycle method gets called by react as soon as a component is inserted into the DOM?

45. How to use the spread operator in React?

46. What are the steps to implement search functionality in reactjs?

47. How to define property in the react component?

48. What is StrictMode in React?

49. How to avoid prop drilling in React?

You can avoid Prop Drilling in the following ways:

- Using a Context API

- Using Render Props

50. What are the key benefits of ReactJS development?

Key Benefits of ReactJS Development

- Allows developers to reuse components

- It is easy to learn

- It has the benefits of being a JavaScript library

- It is SEO friendly

- It ensures faster rendering

- It has a growing community

- It is supported by handy tools

- It offers scope for testing the code

51. What are synthetic events?

A synthetic event is React's cross-browser wrapper around the browser's native event. It exposes the same interface as the native event, including stopPropagation() and preventDefault(), and behaves identically across browsers.
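The wrapper idea can be illustrated with a small plain-JavaScript sketch. This is a toy model and not React's SyntheticEvent implementation: the function `wrapNativeEvent` and the two fake event objects are hypothetical, and the legacy `srcElement` / `returnValue` shape is used only to show what kind of difference a wrapper can hide.

```javascript
// Toy illustration of a cross-browser event wrapper; NOT React's implementation.
function wrapNativeEvent(nativeEvent) {
  return {
    nativeEvent, // the original event stays reachable
    type: nativeEvent.type,
    // Standard events expose "target"; some legacy events exposed "srcElement".
    target: nativeEvent.target || nativeEvent.srcElement,
    defaultPrevented: false,
    preventDefault() {
      this.defaultPrevented = true;
      if (typeof nativeEvent.preventDefault === "function") {
        nativeEvent.preventDefault();
      } else {
        nativeEvent.returnValue = false; // legacy fallback
      }
    },
  };
}

// Two fake "native" events with different shapes (hypothetical test data).
const button = { id: "save" };
const standardEvent = {
  type: "click",
  target: button,
  preventDefault() {
    this.prevented = true;
  },
};
const legacyEvent = { type: "click", srcElement: button, returnValue: true };

const wrappedStandard = wrapNativeEvent(standardEvent);
const wrappedLegacy = wrapNativeEvent(legacyEvent);

// Calling code uses one interface, whatever the underlying event looks like.
wrappedStandard.preventDefault();
wrappedLegacy.preventDefault();
```

Code that handles the wrapped event never needs to check which browser produced it, which is the benefit the answer above describes.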

Top 20 React.Js Interview Questions

Here you will find the list of top React interview questions, written under the supervision of industry experts. These are the most common questions, usually asked in every interview for the role of React developer.

- What is HOC?

- What is the difference between the rest and spread operators?

- What are the key benefits of using arrow functions?

- What is a pure component?

- What are controlled and uncontrolled components, with an example?

- What are refs?

- What is the difference between class-based and function-based components?

- How does React work?

- Why is the virtual DOM faster than the real DOM?

- How does the virtual DOM work in React?

- What is the difference between null and undefined?

- Angular 8 Interview Questions and Answers

Angular, one of the foremost frameworks, released version 8 on 28th May 2019. The release brought updates across the whole platform, including the core framework, the Angular Material library, and the CLI (Command Line Interface). The Angular 8 interview questions below cover these topics. Read them carefully and score well.

Quick Facts About Angular 8

- What is the latest version of Angular? Angular 11.0, released on 11th November 2020

- When was Angular 8 released? 28th May 2019

- What language does Angular use? TypeScript

- Who is the developer of Angular 8? Google

This is a list of the most frequently asked Angular 8 interview questions and answers. Please have a good read. It is also available in PDF format, so you can download it and brush up your theoretical skills on this subject.

Most Frequently Asked Angular 8 Interview Questions

In this article, we list frequently asked Angular 8 interview questions and answers, in the belief that they will help you score higher marks. The article has been written under the guidance of industry professionals and covers all the current competencies.

1. What is the difference between Angular 7 and Angular 8?

| Angular 7 | Angular 8 |
| --- | --- |
| Angular 7 is more difficult to use. | Angular 8 is easier to use. |
| It has features such as virtual scrolling, CLI prompts, application performance improvements, drag and drop, bundle budgets, Angular compiler updates, Angular Elements, NativeScript support, better error handling, etc. | It has more advanced features such as differential loading, the Ivy rendering engine, builder APIs, Bazel support, support for $location, router backward compatibility, opt-in usage sharing, web workers, etc. |
| Breaking changes in Angular 7 affect the Component Dev Kit (CDK), the Material design library, and virtual scrolling. | Breaking changes in Angular 8 affect the core framework, the Angular Material library, and the CLI. |
| It supports TypeScript versions lower than 3.4. | It does not support TypeScript versions lower than 3.4. |
| It supports older Node.js versions. | It requires Node.js version 10.9 or later. |

2. What is Bazel in Angular 8?

In Angular 8, Bazel is a new build system, available as an opt-in preview. It lets you build your backends and frontends with the same tool. It also supports remote builds and caching on a build farm.

The main features of Bazel are:

- It is the build tool Google uses internally, released as open source.

- It can run build and test actions in parallel across multiple machines.

- It constructs a dependency graph, which lets it rebuild only what has changed.

- It supports customization.

3. What is the purpose of Wildcard route?

A wildcard route uses the path '**', which matches any URL that no earlier route has matched. It is normally defined last in the route configuration and is typically used to display a "page not found" view or to redirect to a default route.
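As a minimal sketch, a route configuration with a wildcard entry might look like the following. The component names (`HomeComponent`, `AboutComponent`, `PageNotFoundComponent`) are hypothetical placeholders, and the small `matchRoute` helper only imitates the first-match-wins ordering for illustration; it is not Angular's router.

```javascript
// Hypothetical placeholder components; in a real app these are Angular component classes.
class HomeComponent {}
class AboutComponent {}
class PageNotFoundComponent {}

// Order matters: the first matching route wins, so the wildcard goes last.
const routes = [
  { path: "home", component: HomeComponent },
  { path: "about", component: AboutComponent },
  { path: "**", component: PageNotFoundComponent }, // anything not matched above
];

// Illustration only: a tiny first-match lookup, not Angular's matching algorithm.
function matchRoute(url) {
  return routes.find((route) => route.path === "**" || route.path === url);
}

const known = matchRoute("about");
const unknown = matchRoute("no/such/page");
```

If the wildcard entry were placed first, it would swallow every URL, which is why it belongs at the end of the array.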

4. What is the difference between promise and observable in angular8?
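One way to see the contrast is with a small plain-JavaScript sketch. The promise part uses the standard Promise API. The observable part is a hypothetical, stripped-down stand-in (`createObservable`) written for this illustration; it is not the RxJS Observable class that Angular uses, and it leaves out errors, completion, and unsubscription.

```javascript
const log = [];

// Promise: the executor runs immediately when the promise is created (eager),
// and only the first resolve() call counts.
const promise = new Promise((resolve) => {
  log.push("promise executor ran");
  resolve(1);
  resolve(2); // ignored: a promise settles only once
});

// Hypothetical minimal observable: nothing runs until subscribe() is called (lazy),
// and the producer may deliver any number of values.
function createObservable(producer) {
  return { subscribe: (next) => producer(next) };
}

const observable = createObservable((next) => {
  log.push("observable producer ran");
  next(1);
  next(2);
  next(3);
});

// Snapshot taken before subscribing: only the promise has done any work so far.
const logBeforeSubscribe = [...log];

const received = [];
observable.subscribe((value) => received.push(value)); // values arrive synchronously here

// Promise callbacks always run asynchronously, after the current code has finished.
promise.then((value) => log.push(`promise resolved with ${value}`));
```

Creating the observable does nothing by itself, while creating the promise already runs its executor; the subscriber then receives three values where the promise can deliver only one.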

| Observables | Promises |
| --- | --- |
| Both synchronous as well as asynchronous | Always asynchronous |
| Can emit multiple values | Provides only one single value |
| It is lazy | It is eager |

5. What is the purpose of Codelyzer?

Codelyzer is an open-source static analysis tool for Angular projects. It runs on top of TSLint, is configured through the tslint.json file, and checks whether the code follows a set of predefined guidelines. It analyzes only the static code; it does not run the application.

6. What are the new features in angular 8?

Angular 8 has the following new features:

- Differential loading: It is a technique that automatically makes your Angular applications more performant. When you build an application for production, two bundles are created: one bundle for modern browsers that support ES6+ and another bundle for older browsers that only support ES5.

- Dynamic imports for lazy routes: In Angular 8 there is nothing new in the concept of lazy routes itself, but the syntax has changed. Older versions of Angular use a custom string syntax, whereas Angular 8 uses the standard dynamic import syntax, so the Angular-specific syntax has been replaced by the industry standard.

- Ivy rendering engine: It translates the templates and components into regular HTML and JavaScript that the browser can interpret and understand.

- Bazel: It is a build tool with which Angular developers can build backends and frontends.
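The change in lazy route syntax can be sketched as follows. The module path `./admin/admin.module` and the name `AdminModule` are hypothetical; the two objects only show the shape of the route entry before and after Angular 8.

```javascript
// Angular 7 and earlier: a custom string in the form "path#ModuleName".
const legacyRoute = {
  path: "admin",
  loadChildren: "./admin/admin.module#AdminModule",
};

// Angular 8: a function that uses the standard dynamic import() syntax.
// The import only runs when the router first navigates to this route.
const lazyRoute = {
  path: "admin",
  loadChildren: () => import("./admin/admin.module").then((m) => m.AdminModule),
};
```

Because the new form is ordinary JavaScript, editors and build tools can follow the import without Angular-specific knowledge of the string format.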

7. What are the limitations of Web Workers?

Here are the limitations of a Web Worker:

- A web worker cannot directly manipulate the DOM

- It has limited access to methods and properties of the window object.

- It cannot be run directly from the file system. A web worker needs a server to run.

8. What are the performance improvements in the core of Angular 8?

Angular 8 has advanced features that improve both workflow and performance. These include differential loading, CLI workflow improvements, dynamic imports for lazy routes, the Ivy rendering engine, Bazel, etc.

9. Why are Service Workers used in Angular?

A Service Worker is used in Angular 8 as one of the basic steps in converting an application into a Progressive Web App (PWA). Service workers function as network proxies: they intercept all outgoing HTTP requests made by the application and decide how to respond to them.

10. How to upgrade angular 7 to 8?

Steps to upgrade Angular 7 to 8

- Install TypeScript 3.4

- Use Node LTS 10.16 or later

- Run the following command in a terminal: ng update @angular/cli @angular/core

Alternatively, follow the upgrade guide at https://update.angular.io/

11. How can you hide an HTML element just by a button click in Angular?

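The ng-hide directive is used to hide an HTML element when its expression is true. Note that ng-hide belongs to AngularJS (1.x); in Angular 2 and later, the equivalent is the [hidden] property binding or the *ngIf directive.

Here’s an AngularJS example:

<div ng-app="DemoApp" ng-controller="DemoController">
  <input type="button" value="Hide Angular" ng-click="ShowHide()"/>
  <div ng-hide="IsVisible">Angular 8</div>
</div>

<script type="text/javascript">
  var app = angular.module('DemoApp', []);
  app.controller('DemoController', function($scope) {
    // false means ng-hide is inactive, so the element starts out visible
    $scope.IsVisible = false;
    $scope.ShowHide = function() {
      // Toggle the flag on every click
      $scope.IsVisible = !$scope.IsVisible;
    }
  });
</script>

In the above example, before the Hide Angular button is clicked, the expression is false and the element is visible. Clicking the button sets the expression to true, which hides the element; clicking it again shows the element.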

12. Why typescript is used in angular 8?

Angular uses TypeScript because:

- It has a wide range of tools

- It’s a superset of JavaScript

- It makes abstractions explicit

- It makes the code easier to read and understand.

- It takes most of the usefulness within a language and brings it into a JS environment without forcing you out.

13. What are new features in Angular 9?

Here are the top new features of Angular 9

- An undecorated class migration schematic added to the core.

- Numeric Values are accepted in the formControlName.

- Selector-less directives have now been allowed as base classes in View Engine in the compiler.

- Conversion of ngtsc diagnostics to ts.Diagnostics is possible

14. What is the use of RxJS in angular8?

Angular 8 uses observables, which are implemented with the RxJS library, to push values to subscribers over time. The main job of RxJS is to work with asynchronous events.

NgUpgrade in Angular 8 is a library which is used to integrate both Angular and AngularJS components in an application and also help in bridging the dependency injection systems in both Angular & AngularJS.

16. Which command is used to run static code analysis of angular application?

The ng lint command is used to run static code analysis within an Angular application.

17. What is HostListener and HostBinding?

18. What is authentication and authorization in Angular?

| Authentication | Authorization |
| --- | --- |
| Process of verifying the user | Process of verifying that you have relevant access to any procedure |
| Methods: login form, HTTP authentication, HTTP digest, X.509 certificates, and custom authentication method | Methods: access controls for URLs, secure objects and methods, and Access Control Lists (ACL) |

Steps for the Installation of Angular 8 environmental setup

Step 1

Before installing Angular with the Angular CLI tool, make sure that Node.js is already installed on your system.

- If Node.js is not installed on your system, install it using the following steps.

- The basic requirement of Angular 8 is Node.js version 10.9.0 or later.

- Download it from https://nodejs.org/en/

- Install it on your system

- Open the Node.js command prompt

- To check the version, run the command node -v in the console window

Step 2

To install the Angular CLI, use one of the following commands:

npm install -g @angular/cli

or

npm install -g @angular/cli@latest

Step 3

To check the Node.js and Angular CLI versions, run the command ng --version in the console terminal

20. What is runGuardsAndResolvers in Angular 8?

Angular 8 introduced a number of new options for runGuardsAndResolvers. In general, runGuardsAndResolvers is an option in the Angular router configuration that controls when a route's guards and resolvers are run again. The first of the new options is pathParamsChange. With this option, the router re-runs the guards and resolvers when the path parameters change, but not when only query or matrix parameters change. Use the runGuardsAndResolvers option whenever you need control over when guards and resolvers re-run.
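A route entry using this option might look like the sketch below. The classes `UserDetailComponent`, `AuthGuard`, and `UserResolver` are hypothetical placeholders standing in for a real component, guard, and resolver.

```javascript
// Hypothetical placeholders; in a real app these are Angular classes.
class UserDetailComponent {}
class AuthGuard {}
class UserResolver {}

const routes = [
  {
    path: "users/:id",
    component: UserDetailComponent,
    canActivate: [AuthGuard],
    resolve: { user: UserResolver },
    // Re-run guards and resolvers when a path parameter such as :id changes,
    // but not when only query or matrix parameters change.
    runGuardsAndResolvers: "pathParamsChange",
  },
];
```

With this setting, navigating from users/1 to users/2 re-runs the guard and resolver, while changing only a query string on the same user does not.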

21. Why Incremental DOM is Tree Shakable?

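With incremental DOM, which Angular's Ivy renderer uses, the framework does not interpret components at runtime. Instead, each component is compiled into a series of instructions, and a component references only the instructions it needs. An instruction that no component references is left out of the bundle, which is what makes incremental DOM tree shakable. A virtual DOM, by contrast, requires an interpreter. Since it is not known at compile time which parts of that interpreter will be needed, the whole interpreter has to be shipped to the browser.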

22. What is the difference between real Dom and virtual Dom?